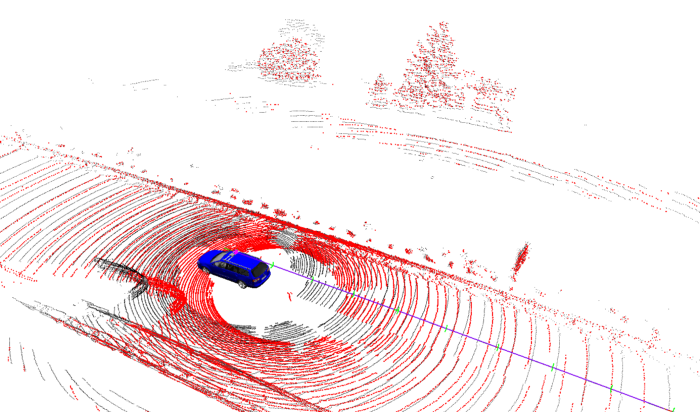

State estimation is a crucial building block of robots and autonomous cars

The problem of estimating states is called state estimation and is a central building block in robotics. It is used in a variety of different components, such as mapping, localization, or SLAM. A large number of state estimation systems perform some form of non-linear least-squares minimization. Prominent examples are the optimization of SLAM graphs, the ICP algorithm, visual odometry, or bundle adjustment.

Such systems estimate the best state as the one that is best explained by the observations, minimizing a squared error function

Those methods seek to find the state that yields the minimum of some error function computed based on the measurements. They basically answer the question: “What should the state be such that the discrepancy between what I should observe and do observe is minimized?”

Real data is noisy and contains mistakes, so-called outliers

As soon as observations from real sensors are involved, outliers will occur in the data. Outliers are severe mistakes in the measurement process, observations that are far off from what should be observed, or other gross errors of some form. Outliers are bad for state estimation systems because they introduce significant errors in the function to minimize, and even a single outlier can lead to a wrong state estimate. A common source of such outliers stems from data association mistakes, for example, when matching features. Consider the following example: if a robot sees an object that looks like the Tour Eiffel, the robot might think it is in Paris — although it might be near the fake Tour Eiffel in Las Vegas. Thus, using such a picture to localize a robot can lead to significant errors.

One solution is to ignore observations that are not well explainable

To avoid that a few or even a single of such outlier measurements have strong effects on the final solution, one can down-weight or even remove observations that do not fit the explanation computed so far. Often, so-called robust kernel functions or M-estimators are used to down-weight the effect of potential gross errors. A robust kernel can be seen as a deformation of the parabola representing the squared error function such that the error grows slower for larger discrepancies between expected and obtained observation.

Handpicking the correct robust kernel can be tricky

Several robust kernels have been developed to deal with outliers arising in different situations. Prominent examples include the Huber, Cauchy, Geman-McClure, or Welsch functions that can be used to obtain a robustified estimator by down-weighting the effect of hard-to-explain observations.

The key problem in choosing a kernel is that we do not know how likely such hard-to-explain measurements occur, i.e., “does this happen often or rarely?” Most of the time, the answer to this questions does not only depend on the sensor itself but also on the robot’s environment. Thus, it is hard to select an appropriate robust kernel before operation. In practice, the choice of the kernel is often done in a trial and error manner, as in most situations, there is no prior knowledge of the outlier process. Most fixed robust kernels chosen a-priori, however, cannot deal effectively with all conditions.

We need kernels that adapt themselves to the current situation

Thus, we should have a way to circumventing the trial and error process for choosing a kernel and realize an automatic adaptation of kernels to the outliers online. In a great 2019 CVPR paper, Jonathan Barron proposed a family of robust loss functions, which through an additional parameter, can change its shape. Through this shape parameter, one can generate several popular robust kernels such as Huber, Cauchy, Geman-McClure, Welsch, etc.

Exploiting this, we can now make the estimation of the kernel shape a part of the state estimation problem itself. The key idea of a recent work by Nived Chebrolu, Thomas Läbe, Olga Vysotska, Jens Behley, and Cyrill Stachniss is to dynamically tune this loss function automatically based on the current residual distribution such that one can blend between different robust kernels and make this choice a part of the optimization problem. Using an alternating optimization approach, one can select the proper kernel for the current outlier distribution, perform state estimation given the kernel shape, and repeat these two steps until convergence.

As a result, point cloud registration using ICP or visual SLAM and bundle adjustment benefit from this approach, especially in situations with lots of outliers, while not requiring hand-crafted outlier rejection schemes. The method can even increase the radius of convergence, for example, in bundle adjustment. This is the way to for least squares optimizers to provide more robust solutions in tricky situations and in this way enable us to build better map of the world or localize more precisely.

... see also:

... further reading:

- N. Chebrolu, T. Läbe, O. Vysotska, J. Behley, and C. Stachniss, “Adaptive Robust Kernels for Non-Linear Least Squares Problems,” IEEE Robotics and Automation Letters (RA-L), vol. 6, pp. 2240–2247, 2021. https://www.doi.org/10.1109/LRA.2021.30613312

- Jonathan T. Barron, “A General and Adaptive Robust Loss Function,” Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), 2019, pp. 4331–4339